Regardless of your political affiliation, we can admit that we’re all a little fed up with the spread of misinformation in just about every social and news media outlet. Unfortunately, technological advances make it easier for perpetrators to spread lies, deceive potential voters, and influence public opinion.

Many of the tricks used are familiar and easy to navigate. For example, we all know that media outlets are biased and that everything should be double-checked. But technology has created some new challenges, ones that seniors and caregivers may not be familiar with.

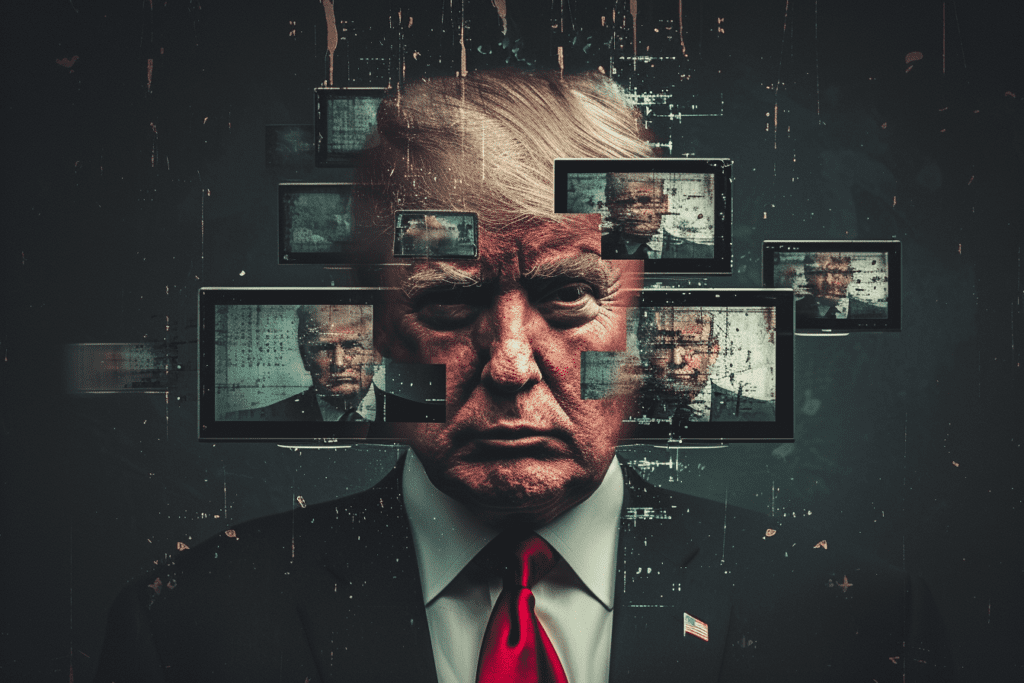

Today we’re looking at one of these – deepfakes.

Deepfakes are the latest menace to our democracy and elections. They are compelling videos, photos, or audio recordings created using artificial intelligence and deep learning algorithms. These manipulations are so advanced that they can make it appear as though someone is saying or doing something they never did.

The video below shows what deepfakes look like and how they work.

Here’s another example:

As you can see, deepfakes can be surprisingly convincing, especially when viewers don’t know to look for them. The technology is also rapidly improving, meaning deepfakes may become more realistic and harder to spot as time goes on.

In this article, we delve into what deepfakes are, the dangers they pose to seniors, and practical steps seniors can take to protect themselves in a politically charged atmosphere.

How Deepfakes Can Have Dramatic Political Impacts

They Spread Misinformation

Deepfake technology poses a significant threat to the integrity of elections. For example, deepfakes can be weaponized to manipulate how seniors interpret political ads.

The ads may mimic a candidate and make it appear as though they said or did something they never did. Seniors, who may rely heavily on traditional media sources such as local broadcasting, can be particularly susceptible. What’s worse, if a political ad provokes a strong reaction, they may share it with friends and family without providing much context. It’s a vicious cycle.

They Can Lead to Voter Suppression

Deepfakes can also discourage seniors from voting. Fake videos or audio recordings may spread false information about voting procedures, polling places, or candidates, dissuading seniors from participating in the democratic process. You may hear your favorite candidate on a robocall or viral video alerting you that polls will stay open until midnight.

You might not think to question whether it was legitimate, so you wait until the last minute to head to the polls, only to find they’ve closed. Or the deepfake may warn that additional documents are required to vote, causing voters to stay home out of concern that they lack this paperwork.

The targets of AI-generated deepfakes cut across party lines. Deepfake robocalls are already being used to influence the upcoming elections. Perpetrators used deepfake technology to imitate President Joe Biden’s voice, asking voters not to vote in the New Hampshire primary and suggesting they save their vote for the November election. The voice is frighteningly convincing and could easily confuse voters.

When in doubt, check this US government site to verify requirements for voting and find the correct information for your local or state election office.

They Can Impact Reputations

Deepfakes can be used to create fake videos or audio recordings of political candidates engaging in unethical or illegal activities. For instance, a deepfake video could depict a candidate accepting a bribe, making racist remarks, or engaging in other damaging behavior.

These fabricated scandals can be circulated on social media and news platforms, damaging a candidate’s reputation and potentially swinging the election in favor of their opponent. Even if the deepfake is later debunked, the damage to the candidate’s image may already be done.

Take, for instance, the AI-generated photos that spread like wildfire across social media depicting former President Donald Trump seemingly trying to break free from New York police officers ahead of his indictment. Other fake images pictured him crying in court.

The AI dangers cut across party lines.

Deepfake technology has been used to manipulate the speeches and statements of political leaders. A deepfake could make a candidate appear to be making a concession speech or endorsing their opponent, leading voters to believe a false narrative about the candidate’s intentions. Such fake videos and likenesses can create confusion and sow discord among supporters, making it difficult for voters to make informed decisions.

There have been several instances where celebrities and well-known individuals have fallen victim to deepfake technology. One notable case involves comedian and actor Jordan Peele, who used deepfake technology to create a video that appeared to show former President Barack Obama delivering a fabricated speech.

In the video, Peele’s voice and facial expressions were superimposed onto Obama’s image, producing a convincing yet entirely fake version of the former president. Peele aimed to raise awareness about the potential misuse of deepfake technology for political manipulation and misinformation.

World leaders haven’t escaped deepfakes either. The group RepresentUs used a deepfake of North Korea’s leader, Kim Jong Un, to draw attention to our fragile and collapsing democracy.

The Kim Jong Un video included a brief disclaimer at the end: “This footage is not real, but the threat is. Join us.” Despite that disclaimer, some YouTube commenters assumed the video was real.

They Include Fake Endorsements

Deepfakes can also fabricate endorsements from influential figures or organizations. For example, a deepfake video could show a celebrity endorsing a candidate they have never supported. Such endorsements can sway public opinion and garner support for a candidate based on a false premise, ultimately influencing election outcomes.

In all these cases, the ability of deepfake technology to create convincing and deceptive content makes it a potent tool for manipulating elections and misleading voters, undermining the democratic process and eroding trust in the electoral system. It highlights the importance of vigilance, fact-checking, and technological countermeasures to mitigate the impact of deepfakes on elections.

They Make Us Question Reality

So far, political deepfake disinformation campaigns remain relatively rare, although they are likely to become more common as time goes on.

One of the biggest current impacts is that the mere possibility of deepfakes makes people question reality. You can no longer assume that a video or a photo is real. This may dissuade some people from voting entirely because they don’t know which information to trust.

Note: All of these issues are made worse by the fact that deepfakes are surprisingly easy to create. The technology for doing so continues to improve, while technology for deepfake detection is lagging behind.

How to Protect Seniors from Political Deepfake Threats

To protect themselves from political deepfake threats, seniors can take several proactive steps:

Use Trusted Sources

Seniors should rely on reputable sources and consult with certified financial professionals when considering investment opportunities or financial advice. Avoid making impulsive decisions based on videos or endorsements that appear suspicious.

In particular, be wary of any information from social media and YouTube. These are hotspots for fake information and deepfaked videos.

It’s also important to investigate any information that seems odd. As the technology improves, even reputable sources may occasionally be fooled by deepfakes, so it’s important to stay skeptical.

Watch Things Live

Deepfakes are generally created from recorded footage. It’s much harder to alter a live video convincingly, and attempts to do so are more likely to be noticeable. As such, watching events live is a more reliable way to see what was actually said.

Verify Information

Seniors should fact-check information presented in political ads and news stories. They should rely on reputable news sources and cross-reference information from multiple outlets.

This could include searching online for reports of deepfakes, such as “recent Biden deepfakes.” Doing so can reveal whether any concerning videos or messages are going around.

Be Critical Thinkers

Encourage seniors to think critically and question the authenticity of suspicious videos or audio recordings. Don’t take anything at face value.

Think about whether the video or audio is something the person is likely to say. If it’s a large break from form, like in the Jordan Peele Obama deepfake, the deception becomes much more obvious.

Critical thinking is especially important with politics, as we often have strong biases. It’s easy to buy into a video that appears to confirm your assumptions.

Report Suspected Deepfakes

Seniors should report any suspected deepfake content to relevant authorities, such as election officials or social media platforms. Timely reporting can help mitigate the spread of misinformation.

If you’ve spotted it, others are likely suspicious, too. Putting the spotlight on the suspicious message or messenger can make those behind it second-guess their strategy and put others on alert.

This is especially important if a potential deepfake relates to local elections. While deepfakes of Biden, Obama, and celebrities hit the news quickly, deepfakes of less well-known politicians (including local politicians) receive less airtime, so they can remain undiscovered for longer.

Is the Law on Our Side?

Many social media platforms, such as YouTube, X/Twitter, and Facebook, have laid off content moderators who identified and removed suspicious content, including posts making false claims about who won the last election. This puts the onus on viewers and readers to discern what’s real. The realism of AI-generated images, audio, and video leaves us all in the vulnerable position of having to question any and all sources.

Some platforms include a tag telling users that ‘fact checking is needed’ or that ‘this information is possibly misleading’. However, not all deepfakes are tagged this way, and it’s easy for some to slip through, as was the case with the AI-generated images of Trump being arrested.

Many county officials are taking things into their own hands and investing in campaigns to educate the public about this threat.

Various countries, including the United States, have been actively exploring legislation to protect citizens against AI’s impact, including tempering its effect on the upcoming elections. It’s important to know that while several bills have been introduced in the U.S., none has yet been passed.

Thankfully, there is at least bipartisan engagement to fight the dangers of AI. Senators Chuck Schumer (D-NY) and Mike Rounds (R-SD) have hosted several forums with experts, including Bill Gates, Elon Musk, and Mark Zuckerberg, to discuss the widespread impact of AI.

Senators Amy Klobuchar (D-MN), Cory Booker (D-NJ), and Michael Bennet (D-CO) introduced the REAL Political Ads Act, which would require disclosure of the use of AI in political ads.

Several senators (Dick Durbin, D-IL; Lindsey Graham, R-SC; Amy Klobuchar, D-MN; and Josh Hawley, R-MO) have banded together behind the DEFIANCE Act, a bill that would allow victims to sue individuals who used their image and likeness in sexually explicit content. It’s an important start.

In addition, in September 2023, Rep. Yvette Clarke (D-NY) introduced the DEEPFAKES Accountability Act (H.R. 5586), which would criminalize the use of deepfakes that threaten our national security.

In October 2022, the White House Office of Science and Technology Policy (OSTP) created the Blueprint for an AI Bill of Rights to outline best practices and protections for consumers and the public. Unfortunately, none of it is presently enforceable.

What gave me great hope was listening to the bipartisan tongue-lashing of social media kings on January 31st, 2024, when the CEOs of X/Twitter, Meta (Instagram’s parent company), Discord, Snapchat, and TikTok testified. I listened to the 4-hour congressional hearing, in which senators scrutinized these giants and blamed them for failing to keep children safe online, leading to unprecedented levels of child exploitation and suicide. Senators pressed them to commit to better enforcement, warned that they were no longer trusted to police themselves, and signaled an intention to revoke their current immunity from liability. This should lead to better enforcement in other areas that protect all consumers.

AI deepfakes have the potential to ruin reputations fast, and no one is more sensitive about their reputation than Hollywood stars. The use of actors’ images or likenesses without compensation was among the key issues actors fought over, and the Screen Actors Guild (SAG-AFTRA) had to address it to end the 118-day strike in 2023.

Actress Scarlett Johansson started to level the playing field by suing those who used her likeness.

Conclusion

As deepfake technology continues to evolve, seniors must be vigilant in protecting themselves from financial fraud and political manipulation. By understanding the dangers of deepfakes and taking proactive measures to verify information, seniors can make informed decisions when exercising their rights in the political process.

Seniors must remain informed and cautious in the face of these emerging threats to ensure the integrity of our democratic system.

The most important thing is simply being aware that videos, photos, and audio can now be faked, with increasing realism. This means that everything we see and hear needs to be considered more carefully than ever before.
